Author:

Isidor Buchmann, CEO & founder, Cadex Electronics Inc.

Date:

07/20/2012

For simplicity, you can imagine the battery as an energy storage device analogous to a fuel tank. However, measuring stored energy in an electrochemical device is far more complex than that comparison suggests. While an ordinary fuel gauge measures liquid flow from a tank of known size, a battery fuel gauge has no such fixed reference and only reveals the OCV (open-circuit voltage), a reflection of the battery's SoC (state of charge). A battery's nameplate ampere-hour rating holds true only for the short time when the battery is new. In essence, a battery is a shrinking vessel that takes on less energy with each charge, and the marked Ah rating is no more than a reference for what the battery should hold.

A battery cannot guarantee a quantified amount of energy because prevailing conditions restrict delivery. These conditions are mostly unknown to the user and include battery capacity, load current, and operating temperature. Considering these limitations, one can appreciate why battery fuel gauges may be inaccurate.

The simplest method to measure a battery's SoC is to read its voltage, but this can be inaccurate. Batteries within a given chemistry have dissimilar architectures and deliver unique voltage profiles. Temperature also plays a role: heat raises the voltage and a cold ambient temperature lowers it. Furthermore, when a charge or discharge agitates the battery, the OCV no longer represents the true SoC, and the battery requires a few hours of rest to regain equilibrium; battery manufacturers recommend 24 hours. The largest challenge, however, is the flat discharge voltage curve of nickel- and lithium-based batteries, over which the voltage changes little as the SoC falls. Additionally, load current through the battery's source impedance shows up as a voltage drop during discharge.

Many fuel gauges measure SoC by coulomb counting. The theory goes back some 250 years, to when Charles-Augustin de Coulomb first established the Coulomb Rule. It works on the principle of measuring in- and out-flowing currents (Figure 1).

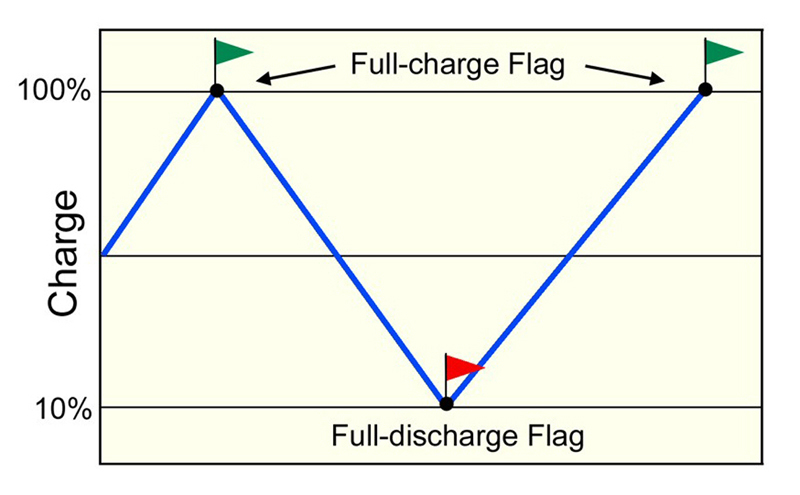
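The principle of measuring in- and out-flowing currents can be illustrated with a minimal Python sketch. It is not taken from any particular fuel-gauge product: the class name `CoulombCounter`, its fields, and the sign convention (positive current charges the battery) are assumptions made for illustration, and the sketch deliberately models the idealized case with no losses.

```python
from dataclasses import dataclass


@dataclass
class CoulombCounter:
    """Idealized gauge that integrates current over time (hypothetical sketch)."""

    capacity_ah: float       # assumed usable capacity, in ampere-hours
    charge_ah: float = 0.0   # charge the gauge believes is stored

    def update(self, current_a: float, dt_s: float) -> None:
        """Add one current sample; positive charges, negative discharges."""
        delta_ah = current_a * dt_s / 3600.0  # ampere-seconds to ampere-hours
        # Keep the estimate within the limits of the assumed capacity.
        self.charge_ah = min(max(self.charge_ah + delta_ah, 0.0), self.capacity_ah)

    @property
    def soc_percent(self) -> float:
        """State of charge relative to the assumed capacity."""
        return 100.0 * self.charge_ah / self.capacity_ah


gauge = CoulombCounter(capacity_ah=2.0)

# Charge at 1 A for one hour, sampled once per second.
for _ in range(3600):
    gauge.update(current_a=1.0, dt_s=1.0)
soc_after_charge = gauge.soc_percent

# Discharge at 0.5 A for one hour in a single step.
gauge.update(current_a=-0.5, dt_s=3600.0)
soc_after_discharge = gauge.soc_percent

print(f"after charge: {soc_after_charge:.1f}%, after discharge: {soc_after_discharge:.1f}%")
```

Note that the reading is only as good as the `capacity_ah` value the gauge assumes and the accuracy of each current sample; any error in either accumulates from one update to the next.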

Theoretically, coulomb counting should be flawless but, in practice, its accuracy is limited. For example, if a battery charges for one hour at one ampere, the same amount of energy should be available on discharge, but this is not the case. Inefficiencies in charge acceptance, especially towards the end of charge, and losses during discharge and storage reduce the total energy delivered and skew the readings. The available energy is always less than what the charging circuit fed into the battery. For example, the energy cycle (charging and then discharging) of the Li-ion batteries in the Tesla Roadster automobile is about 86% efficient.

A common error in fuel-gauge design is assuming that the battery will stay the same. Such an oversight renders the readings inaccurate after about two years. If, for example, the capacity decreases to 50% due to age, the fuel gauge will still show 100% SoC on full charge but the runtime will be half. For the user of a mobile phone or a laptop, this fuel-gauge error may cause only a mild inconvenience. The problem becomes more acute, however, with an electric drivetrain that depends on precise predictions to reach the destination.

A fuel gauge based on coulomb counting needs periodic calibration, also known as capacity re-learning. Calibration corrects the tracking error that develops between the chemical and digital battery over charge and discharge cycles. The gauging system could omit the correction if the battery received a periodic full discharge at a constant current followed by a full charge. The battery would reset with each full cycle and the tracking error would remain below 1% per cycle. In real life, however, a battery may discharge for a few minutes with a load signature that is difficult to capture, then partially recharge and sit in storage with varying levels of self-discharge depending on temperature and age.

Manual calibration is possible by running the battery down to a Low Battery limit. This discharge can take place in the equipment or with a battery analyzer. A full discharge sets the discharge flag and the subsequent recharge sets the charge flag (Figure 2). Establishing these two markers allows SoC calculation by tracking the charge between the flags. For best results, calibrate a frequently used device every three months or after 40 partial cycles. If the device applies a periodic deep discharge of its own accord, no additional calibration is necessary.

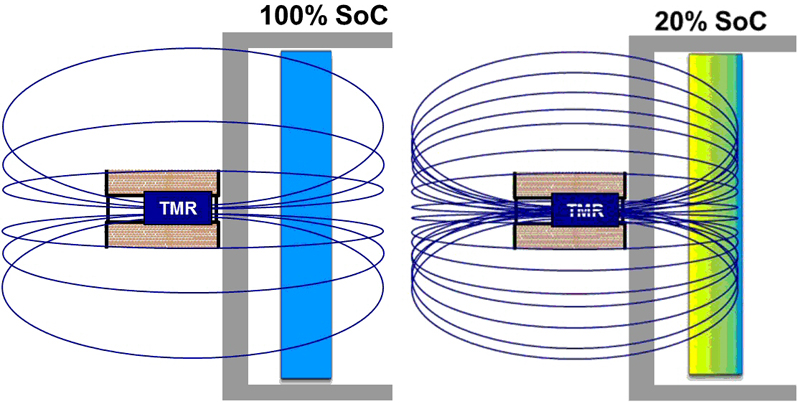
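The flag-based calibration described above can be sketched in Python as follows. This is an illustrative assumption, not the logic of any specific smart-battery chip: the class `RelearningGauge`, its method names, and the 90-day reading of "three months" are hypothetical, and charge-acceptance losses are ignored for brevity.

```python
from dataclasses import dataclass


@dataclass
class RelearningGauge:
    """Coulomb-counting gauge that re-learns capacity between two flags."""

    learned_capacity_ah: float             # starts at the nameplate rating
    charge_ah: float = 0.0                 # digital estimate of stored charge
    partial_cycles: int = 0                # partial cycles since calibration
    _counted_since_empty_ah: float = 0.0   # charge counted since discharge flag
    _discharge_flag: bool = False

    def update(self, current_a: float, dt_s: float) -> None:
        """Positive current charges the battery, negative discharges it."""
        delta_ah = current_a * dt_s / 3600.0
        self.charge_ah = max(self.charge_ah + delta_ah, 0.0)
        if self._discharge_flag:
            self._counted_since_empty_ah += delta_ah

    def on_low_battery(self) -> None:
        """Low Battery limit reached: set the discharge flag, zero the count."""
        self._discharge_flag = True
        self.charge_ah = 0.0
        self._counted_since_empty_ah = 0.0

    def on_full_charge(self) -> None:
        """Charger reports full: set the charge flag and re-learn capacity."""
        if self._discharge_flag:
            # Charge counted between the two flags becomes the new capacity.
            self.learned_capacity_ah = self._counted_since_empty_ah
            self.partial_cycles = 0
            self._discharge_flag = False
        self.charge_ah = self.learned_capacity_ah

    def on_partial_cycle(self) -> None:
        self.partial_cycles += 1

    def needs_calibration(self, days_since_calibration: int) -> bool:
        """Guideline from the text: every three months or 40 partial cycles."""
        return days_since_calibration >= 90 or self.partial_cycles >= 40

    @property
    def soc_percent(self) -> float:
        return min(100.0 * self.charge_ah / self.learned_capacity_ah, 100.0)


# A battery with a 2.0 Ah nameplate rating that has faded to 1.0 Ah.
gauge = RelearningGauge(learned_capacity_ah=2.0)
gauge.on_low_battery()                       # run down to the Low Battery limit
gauge.update(current_a=0.5, dt_s=2 * 3600)   # charger delivers 1.0 Ah, then stops
gauge.on_full_charge()                       # capacity re-learned as 1.0 Ah
gauge.update(current_a=-0.5, dt_s=3600)      # user draws 0.5 Ah
print(f"learned capacity: {gauge.learned_capacity_ah} Ah, SoC: {gauge.soc_percent:.0f}%")
```

In this example the calibrated gauge reports 50% after 0.5 Ah is drawn. A gauge that still assumed the 2.0 Ah nameplate rating would show 75% for the same battery, which is the kind of tracking error that calibration is meant to remove.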

Calibration occurs by applying a full charge, discharge, and charge. This cycle can run in the equipment or with a battery analyzer as part of battery maintenance. If the application does not calibrate the battery regularly, most smart battery chargers obey the dictates of the chemical battery rather than the electronic circuit. There are no safety concerns if a battery is out of calibration. The battery will charge fully and function normally, but the digital readout may be inaccurate and become a nuisance.

To overcome the need for calibration, modern fuel gauges use learning: they estimate how much energy the battery was able to deliver on the previous discharge. Learning, or trending, may also include charge times because a faded battery charges more quickly than a good one. The ASOD (Adaptive System on Diffusion) from Cadex Electronics, for example, features a unique learn function that adjusts to battery aging and estimates capacity to within ±2% across 1,000 battery cycles, the typical life span of a battery. SoC estimation is within ±5%, independent of age and load current. ASOD does not require outside parameters. When the battery is replaced, the self-learning matrix gradually adapts to the new battery and regains the high accuracy it achieved with the previous one. The replacement battery must be of the same type.

Researchers are exploring new methods to measure battery SoC. One such technology is quantum magnetism, which does not rely on voltage or current but looks at the battery's magnetic characteristics. For example, the negative plate of a discharging lead-acid battery changes from lead to lead sulfate, which has a different magnetic susceptibility than lead. A sensor based on a quantum-mechanical process reads the magnetic field through a process called tunneling. A battery with low charge has a three-fold increase in magnetic susceptibility compared with a full charge (Figure 3). Cadex calls its implementation of this technology Q-Mag.

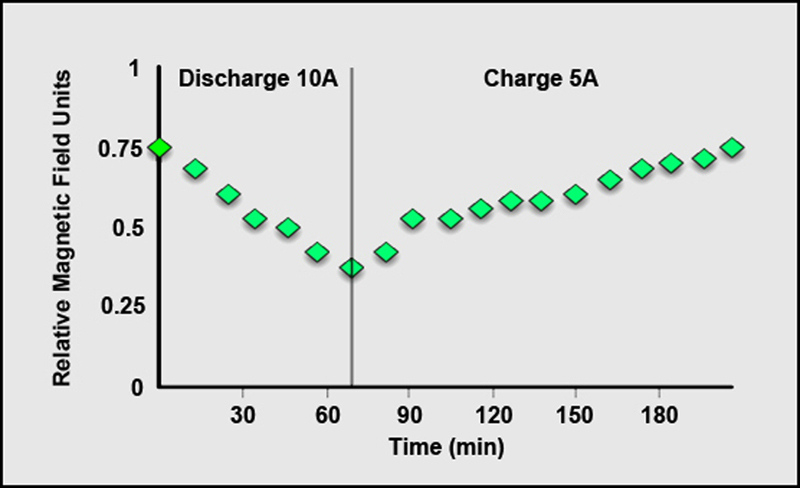

Knowing the precise SoC enhances battery charging but, more importantly, the technology enables diagnostics that include capacity estimation and end-of-life prediction. The immediate benefit, however, is a better fuel gauge, and this is of special interest for Li-ion with its flat discharge curves. Q-Mag-based measurements show a steady drop of the relative magnetic field units while discharging a lithium-iron-phosphate battery and a rise during the charge cycle (Figure 4). The measurement data does not suffer from the rubber-band effect that is common with voltage-based measurements, in which discharge lowers the voltage and charge raises it.

Q-Mag reads SoC while the battery is being charged or discharged. SoC accuracy is ±5% with Li-ion and ±7% with lead acid; calibration occurs by applying a full charge. The excitation current that generates the magnetic field is less than 1 mA, and the system is immune to most interference. Q-Mag works with cells encased in foil, aluminum, or stainless steel, but not in ferrous metals.

www.cadex.com www.BatteryUniversity.com