Author:

Odd Jostein Svendali, Field Application Engineer, Atmel, Norway

Date

01/01/2012

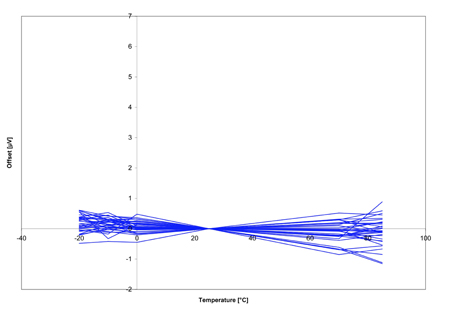

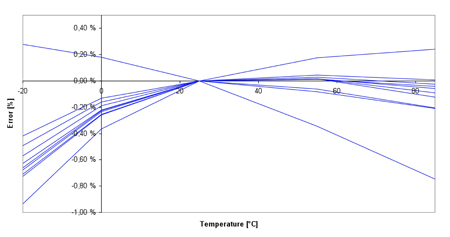

Lithium-ion batteries are becoming the technology of choice for most portable applications requiring rechargeable batteries. The advantages include high energy density per volume and weight, high voltage, low self-discharge, and no memory effect. When using a lithium-ion battery, it is important to manage it correctly to get safe operation, the highest capacity per cycle and the longest lifetime, normally by using a battery management unit (BMU).

For safe operation, the BMU must ensure that the battery cells operate within the manufacturer's specification in terms of voltage, temperature and current. When designing a battery management system, the worst-case conditions must be taken into account. One example is the charge termination voltage: for standard notebook batteries, the cell voltage should never exceed 4.25V. The usual recommendation is to take the standard deviation of the BMU's voltage measurement and set the charge termination voltage four standard deviations below this limit. Thus, if the limit is 4.25V and the BMU's voltage measurement has a standard deviation of 12.5mV, charging should cease at 4.2V (4.25V - 4 × 12.5mV).

This conflicts with the desire to get the highest capacity from a given cell, since charging to a higher voltage gives higher capacity. To extend battery life, it is also important to avoid an excessively high charge voltage and an excessively low discharge voltage. Cell wear is most evident when the cells are operated outside the recommended End of Charge Voltage (EOCV) and End of Discharge Voltage (EODV). The voltage measurement accuracy determines the required safety margin to the EOCV and EODV. The critical parameters for voltage measurement accuracy over temperature are ADC gain drift and voltage reference drift. The offset in the voltage measurement is typically less than 3μV against a measurement of 4200mV, and can thus be ignored in a practical design.
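
The four-sigma rule for the charge termination voltage can be written out as a short calculation. The following is a minimal sketch, not part of any BMU firmware; the function name `charge_termination_voltage` and its parameters are chosen here for illustration, and the numbers are the ones quoted in the text.

```python
def charge_termination_voltage(max_cell_v, sigma_v, k=4.0):
    """Return the voltage at which charging should stop.

    max_cell_v: voltage the cell must never exceed (V)
    sigma_v:    standard deviation of the BMU voltage measurement (V)
    k:          number of standard deviations kept as margin (4 in the text)
    """
    if sigma_v < 0 or k < 0:
        raise ValueError("sigma_v and k must be non-negative")
    return max_cell_v - k * sigma_v


# Values from the text: 4.25 V limit, 12.5 mV standard deviation.
v_stop = charge_termination_voltage(4.25, 0.0125)
print(f"Stop charging at {v_stop:.3f} V")
```

A more accurate measurement (a smaller `sigma_v`) moves the termination voltage closer to the 4.25V limit, which is the link between measurement accuracy and usable capacity that the rest of the article builds on.
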
The most efficient way to implement a gas gauge for a lithium-ion battery is to accurately track the charge flowing in and out of the battery. An accurate voltage measurement can be used to compensate for errors in the charge flow measurement, because the relationship between Open Circuit Voltage (OCV) and State of Charge (SoC) is fairly constant. Some of the latest lithium-ion cells have a very flat voltage characteristic, which makes it much more challenging to correct an error in the current measurement with an OCV measurement: a small error in the voltage measurement can lead to a significant error in the SoC calculation. Optimum accuracy is achieved with accurate current and time base measurements.

The offset in the current measurement ADC can be reduced by measuring the offset in a controlled environment and then subtracting this offset from every measurement. But this does not take the drift of the offset into account. Figure 1 shows the remaining offset when using this technique for a number of parts. A better method has been implemented in Atmel's battery management units. With the ATmega16HVA, for instance, the offset can be cancelled out by periodically changing the polarity of the current measurement. With this method, a very small but fixed offset will remain. This can also be removed by measuring it before the protection FETs are opened, giving a known current flow through the battery pack. As can be seen from Figure 2, a significant improvement is achieved by using this method. The remaining error caused by offset drift in the Atmel BMUs is below the quantization level.

The advantage of eliminating the offset is that current measurement at low levels can be performed accurately. For devices with a large offset, it is necessary at some point to stop measuring and start predicting the current value. Some BMUs have a snap-to-zero band, or dead band, of up to 100mA using a 5mΩ sense resistor. This is still a significant current, considering that a notebook, for instance, can stay in a mode with this current for a very long time.
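
The difference between a one-time offset calibration and periodic polarity switching can be illustrated with a small numerical model. This is a sketch under simplifying assumptions, not a description of the ATmega16HVA hardware: the ADC is modelled as an ideal converter with an additive offset that does not change sign when the input polarity is flipped, the small fixed residual mentioned in the text is ignored, and all function names and offset values are hypothetical.

```python
def adc_reading_uv(sense_uv, polarity, offset_uv):
    """Hypothetical ADC model: input polarity can be flipped, the offset cannot."""
    return polarity * sense_uv + offset_uv


def static_offset_correction(sense_uv, offset_now_uv, offset_at_cal_uv):
    """Subtract an offset that was measured once, in a controlled environment."""
    return adc_reading_uv(sense_uv, +1, offset_now_uv) - offset_at_cal_uv


def polarity_switched(sense_uv, offset_now_uv):
    """Combine one normal and one inverted sample; the common offset cancels."""
    plus = adc_reading_uv(sense_uv, +1, offset_now_uv)
    minus = adc_reading_uv(sense_uv, -1, offset_now_uv)
    return (plus - minus) / 2.0


def uv_to_ma(sense_uv, r_sense_ohm):
    """Convert a sense-resistor voltage in microvolts to a current in milliamps."""
    return sense_uv * 1e-3 / r_sense_ohm


# Illustrative numbers only; they are not taken from the article's figures.
R_SENSE_OHM = 0.005      # 5 mOhm sense resistor, as in the text
sense_uv = 50.0          # 10 mA through 5 mOhm gives 50 uV
offset_at_cal_uv = 20.0  # assumed offset at calibration time
offset_now_uv = 26.0     # assumed offset after temperature drift

static_uv = static_offset_correction(sense_uv, offset_now_uv, offset_at_cal_uv)
switched_uv = polarity_switched(sense_uv, offset_now_uv)
print(f"static calibration : {uv_to_ma(static_uv, R_SENSE_OHM):.2f} mA")
print(f"polarity switching : {uv_to_ma(switched_uv, R_SENSE_OHM):.2f} mA")
```

In this model the static correction is wrong by exactly the amount the offset has drifted since calibration, while the polarity-switched result does not depend on the offset at all. A real device will differ from this ideal picture, which is why the text mentions a small fixed residual.
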

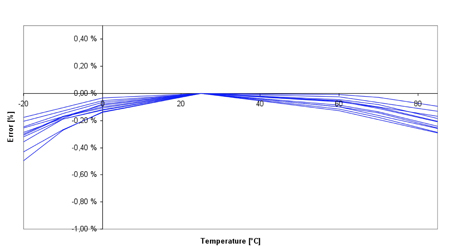

The current measurement ADC offset error limits the lowest current level that can be measured for a given sense resistor size. This leads to an important tradeoff between a low sense resistor value and the required dead band, in which the current level is too low for the charge flow to be accumulated. Most equipment manufacturers are looking at ways to reduce current consumption and stay in low-power modes whenever possible, making it more important to ensure that small currents can be measured accurately. Measuring a voltage in the μV range accurately is challenging in itself, and when the chip experiences temperature variation, the challenge becomes greater. Atmel's offset calibration method has proven to be very effective when temperature effects are taken into account. As can be seen in Figure 2, the temperature effects are eliminated, ensuring that the offset is not a problem for the accuracy of the measurement.

The bandgap voltage reference is a vital component in achieving high-accuracy results. A deviation of the actual voltage reference value from the value expected in firmware translates to a gain error in the measurement result. In most cases this is the most important error source for cell voltage measurements and for measurements of high currents. A standard bandgap voltage reference combines a current that is proportional to absolute temperature (PTAT) with a current that is complementary to absolute temperature (CTAT) to give a current that is relatively stable over temperature. This current is run through a resistor to give a voltage that is relatively constant over temperature. However, since the CTAT shape is curved while the PTAT shape is linear, the resulting voltage curve over temperature is curved. The current levels in the bandgap reference have some production variation, so factory calibration is required to minimize its impact. An example of the variation in an uncalibrated reference is shown in Figure 3. The maximum variation within the temperature range -20°C to 85°C is -0.9% to 0.20%. As indicated in Figure 3, two outliers differ significantly from the other devices.
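
To make the link between reference deviation and gain error concrete, the sketch below converts a percentage error in the reference into the voltage that firmware would report. It assumes an ideal ratiometric ADC (the conversion result is proportional to the input divided by the actual reference) and firmware that scales with the nominal reference value; the function name is hypothetical, and the percentages are the extremes quoted above for the uncalibrated reference.

```python
def reported_voltage_mv(true_mv, ref_error_pct):
    """Voltage reported by firmware when the real reference is off by ref_error_pct.

    Assumes an ideal ratiometric ADC: the code is proportional to
    V_in / V_ref_actual, and firmware scales it with V_ref_nominal.
    """
    ref_ratio = 1.0 + ref_error_pct / 100.0  # V_ref_actual / V_ref_nominal
    return true_mv / ref_ratio


true_mv = 4200.0
for err_pct in (-0.9, 0.0, 0.2):
    reported = reported_voltage_mv(true_mv, err_pct)
    print(f"reference error {err_pct:+.1f}% -> reads {reported:.1f} mV "
          f"({reported - true_mv:+.1f} mV)")
```

Under these assumptions a reference error of a few tenths of a percent becomes a reading error of several millivolts or more at 4200mV, which is far larger than the 3μV offset mentioned earlier and is why the reference dominates the cell voltage error budget.
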

Standard bandgap references commonly used in battery management (BM) devices are calibrated for nominal variation, providing very good accuracy at 25°C. To improve performance over temperature, Atmel adds a second calibration of the voltage reference, in which the temperature coefficient of the bandgap reference is adjusted. This calibration step adjusts the shape and position of the curvature and provides significantly improved stability over temperature, as shown in Figure 4. The maximum variation within the temperature range -20°C to 85°C is in this case as low as -0.5% to 0.0%. Note that the second calibration step not only provides significantly improved accuracy; it also makes it possible to detect and screen out outliers whose temperature characteristics differ significantly from those of normal devices.

The second calibration step is normally not performed for BM devices because of the added production test cost. It requires accurate analog testing of packaged devices at two temperatures, while the industry norm is to test packaged devices at only one temperature. Adding a second test step with high analog accuracy requirements normally gives a significant cost increase. Atmel has a novel, patented method for performing the second test step that minimizes the additional cost by utilizing features that are present in the BM unit itself. The on-board ADCs perform the measurements using accurate external voltage references, the CPU performs the necessary calculations, and the Flash stores the measurement data from the first test step. As a result, very cheap test equipment can be used while still achieving very high accuracy. This method allows Atmel to provide industry-leading performance with very little added test cost.

When the battery reaches a fully discharged or fully charged state, it is the voltage measurement that determines when to shut down the application or stop charging the battery. The safety levels for maximum and minimum cell voltage cannot be compromised, so a guard band must be built in to ensure safe operation in all cases. The higher the guaranteed voltage measurement accuracy, the smaller the guard band that is required and the more of the actual battery capacity can be utilized. For a given voltage and temperature, the voltage measurement can be calibrated, and the voltage measurement error in this condition will be very small. When temperature drift is taken into account, the main factor contributing to measurement error is the voltage reference drift. Figure 5 shows the uncertainty when using a standard voltage reference compared to a curvature-compensated voltage reference. As can be seen, the curvature compensation gives significantly improved accuracy.

High measurement accuracy is vital for getting the most energy out of the battery per cycle and the longest battery pack lifetime without sacrificing safety. To avoid extensive calibration costs, the inherent accuracy of the BMU must be as high as possible. In addition, calibration techniques that use the onboard resources of the MCU can cancel out temperature effects and achieve good calibration at minimal cost.

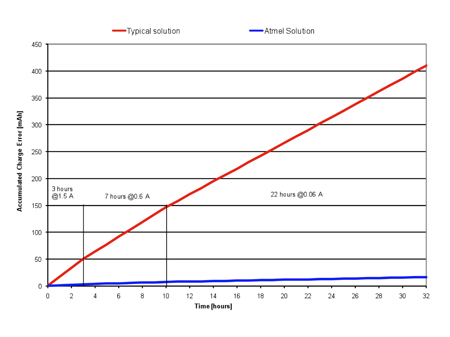

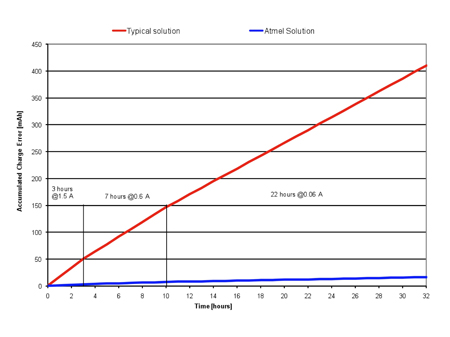

Figure 6 shows a discharge cycle of a 10Ah battery over 32 hours: 3 hours at 1.5A, 7 hours at 0.6A and 22 hours at 60mA. The temperature varies by ±10°C, and a 5mΩ sense resistor is used. The error in the charge accumulation using a standard BMU with typical calibration methods is higher than 400mAh, corresponding to more than 4% of the 10Ah battery in this example. Atmel's solution delivers significantly better accuracy due to its analog design combined with patented calibration methods. With these improvements, the error is reduced to less than 20mAh, corresponding to 0.2%.
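
The arithmetic behind this example can be checked with a short charge-accumulation sketch. The discharge profile and sense resistor value are taken from the text. Everything else is an assumption made for illustration: the article does not say how the error is distributed over the cycle, so the code simply asks what constant current error, and what corresponding voltage across the sense resistor, would accumulate to the quoted 400mAh and 20mAh over 32 hours. The function names are hypothetical.

```python
# Discharge profile from the text: (duration in hours, current in amperes).
PROFILE = [(3.0, 1.5), (7.0, 0.6), (22.0, 0.060)]
R_SENSE_OHM = 0.005  # 5 mOhm sense resistor


def accumulated_charge_mah(profile, current_error_a=0.0):
    """Accumulate charge over a piecewise-constant profile.

    current_error_a models a constant measurement error added to every sample.
    """
    return sum(hours * (amps + current_error_a) for hours, amps in profile) * 1000.0


def equivalent_offset(error_mah, profile, r_sense_ohm):
    """Constant current error (mA) and sense voltage (uV) that would give error_mah."""
    total_hours = sum(hours for hours, _ in profile)
    error_ma = error_mah / total_hours
    error_uv = error_ma * 1e-3 * r_sense_ohm * 1e6
    return error_ma, error_uv


true_mah = accumulated_charge_mah(PROFILE)
print(f"Charge removed over the cycle: {true_mah:.0f} mAh")
for label, err_mah in (("standard BMU figure", 400.0), ("Atmel figure", 20.0)):
    err_ma, err_uv = equivalent_offset(err_mah, PROFILE, R_SENSE_OHM)
    print(f"{label}: {err_mah:.0f} mAh is equivalent to a constant "
          f"{err_ma:.3f} mA error, or {err_uv:.3f} uV across the sense resistor")
```

Seen this way, the long 60mA phase matters most: it lasts 22 of the 32 hours, so even a small uncorrected offset is integrated for a long time while the true current is low.
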